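Hello, everyone!

Izanagi here!

So, Twitter is handy, isn't it?

You can see all kinds of tweets in real time, which makes it a fun way to follow the latest news and opinions!

That got me curious about what topics develop around a given keyword on Twitter.

So this time, I'd like to implement a method that "builds topics from a Twitter keyword search"!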

Development environment

The development environment is as follows.

- macOS Mojave (10.14.6)

- pip (20.1.1)

- Python (3.8.5)

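What you need

Twitter API keys

First, get Twitter API keys so that we can fetch tweets from Twitter.

I won't cover how to obtain them in this article, so please look up one of the available guides and follow it.

Once you have your Twitter API keys, prepare the following four keys:

- "Consumer Key"

- "Consumer Secret"

- "Access Token"

- "Access Token Secret"

Then create a settings file such as "config.py" and save them there!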

This time, I create "config.py" and save the API keys as follows.

    CK = "<Consumer Key>"
    CS = "<Consumer Secret>"
    AT = "<Access Token>"
    AS = "<Access Token Secret>"

That completes the Twitter API key setup.

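Installing the Python libraries

Since we call a REST API with OAuth authentication from Python, install the following libraries.

    pip install requests requests-oauthlib

We also use a library called "gensim", so run the following command as well.

    pip install gensim

Depending on the versions you have installed (very new or very old ones in particular), "gensim" may not work, so try it with a compatible version.

You can check compatibility at the page below.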

gensim

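Also install the morphological analyzer "MeCab" and "emoji", a tool used here to remove emoji.

Installing MeCab takes a bit of work, so I won't cover it in this article.

Please set up your environment by following a guide such as the one below!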

Python3からMeCabを使う - Qiita

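"emoji" can be installed with the following command.

    pip install emoji

The code below also imports pandas, so install it too if it is not already in your environment.

    pip install pandas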

Implementation

Let's get straight to the implementation!

Here is the code I wrote this time.

    import json
    import re

    import emoji
    import MeCab
    import pandas as pd
    from gensim.corpora.dictionary import Dictionary
    from gensim.models import LdaModel
    from requests_oauthlib import OAuth1Session

    # API keys live in config/config.py; search settings in config/config_trend.py
    from config import config
    from config import config_trend

    CK = config.CK
    CS = config.CS
    AT = config.AT
    AS = config.AS

    def main():
        # Keyword to search for
        keyword = config_trend.KEYWORD
        # Number of API requests to make
        limit = config_trend.LIMIT
        # List of words to exclude
        sub_list = config_trend.SUBLIST

        tweet_list = get_twitter_data(keyword, limit, sub_list)
        # Tokenize each tweet into a list of nouns
        common_texts = [tokenize(content) for content in tweet_list]

        result = latent_dirichlet_allocation(common_texts)
        df = pd.DataFrame(result)
        df.to_csv('<path to save the results>', header=False, index=False)
        print(result)

    def get_twitter_data(key_word, repeat, sub_list):
        twitter = OAuth1Session(CK, CS, AT, AS)
        url = "https://api.twitter.com/1.1/search/tweets.json"
        params = {'q': key_word, 'exclude': 'retweets', 'count': '100', 'lang': 'ja',
                  'result_type': 'recent', "tweet_mode": "extended"}
        result_list = []
        mid = -1
        for i in range(repeat):
            if mid > 0:
                # Only fetch tweets whose ID is mid or older
                params['max_id'] = mid
            res = twitter.get(url, params=params)
            if res.status_code != 200:
                # Stop on a failed request
                print("Failed: %d" % res.status_code)
                break
            tweet_ids = []
            timelines = json.loads(res.text)  # Get the list of tweets from the response
            for line in timelines['statuses']:
                tweet_ids.append(int(line['id']))
                text = shape_text(line['full_text'], sub_list)
                result_list.append(text)
            if not tweet_ids:
                # No more tweets to fetch
                break
            mid = min(tweet_ids) - 1
        # Remove duplicate texts
        unique_tweets = list(set(result_list))
        print("Tweets fetched: %s" % len(unique_tweets))
        return unique_tweets

    def latent_dirichlet_allocation(common_texts):
        dictionary = Dictionary(common_texts)
        # Convert to the bag-of-words format that LdaModel can read
        corpus = [dictionary.doc2bow(text) for text in common_texts]
        num_topics = config_trend.COUNTTOPIC
        lda = LdaModel(corpus, num_topics=num_topics)

        # Collect the top words of each topic
        topic_words = []
        for i in range(num_topics):
            tt = lda.get_topic_terms(i, config_trend.COUNTTOPICNUM)
            topic_words.append([dictionary[pair[0]] for pair in tt])

        # Flatten the lists and drop duplicates while keeping the order
        result_topic = []
        for topic in topic_words:
            result_topic = result_topic + topic
        topics = list(dict.fromkeys(result_topic))

        # Drop words that contain digits
        result = []
        for topic in topics:
            if not re.search(r'\d', topic):
                result.append(topic)
        return result

    def tokenize(text):
        # Morphological analysis with MeCab
        tagger = MeCab.Tagger('-d /usr/local/lib/mecab/dic/mecab-ipadic-neologd')
        key = tagger.parse(text)
        output_words = []
        for row in key.split("\n"):
            word = row.split("\t")[0]
            if word == "EOS":
                break
            pos = row.split("\t")[1]
            features = pos.split(",")
            # Keep nouns of three or more characters,
            # skipping person names and adjectival-noun stems
            if len(word) > 2 and features[0] == "名詞":
                if features[1] == "固有名詞" and features[2] == "人名":
                    continue
                if features[1] == "形容動詞語幹":
                    continue
                output_words.append(word)
        return output_words

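    def shape_text(line, sub_list):
        text = "".join(line.splitlines())
        # Remove space-delimited tokens that contain a dot (such as URLs)
        text = re.sub(r'[^ ]+\.[^ ]+', '', text)
        # Remove emoji
        # (on emoji 2.x, use emoji.replace_emoji(text, replace='') instead)
        text = ''.join(c for c in text if c not in emoji.UNICODE_EMOJI)
        # Remove full-width symbols
        text = re.sub(r'[︰-@]', '', text)
        # Remove half-width symbols
        text = re.sub(r'[!-/:-@\[-`{-~]', '', text)
        # Remove the words in the exclusion list
        for sub_text in sub_list:
            text = re.sub(sub_text, '', text)
        return text

    if __name__ == "__main__":
        main()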
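The code above reads its settings from a config_trend module that this article does not show (the imports imply a config package holding both config.py and config_trend.py). Below is a minimal sketch of what config/config_trend.py might contain. The attribute names come from the code, but every value is an assumption picked for illustration; only the keyword コスメ matches the experiment described later.

```python
# config/config_trend.py (hypothetical contents; all values are illustrative)

# Keyword sent to the search API as the 'q' parameter
KEYWORD = "コスメ"

# Maximum number of search requests (each one asks for up to 100 tweets)
LIMIT = 10

# Regular-expression patterns that shape_text() removes from every tweet
SUBLIST = ["プレゼント", "キャンペーン"]

# Number of LDA topics
COUNTTOPIC = 5

# Number of top words taken from each topic
COUNTTOPICNUM = 10
```

Because the entries of SUBLIST are passed to re.sub, they are treated as regular expressions rather than plain strings.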

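The "get_twitter_data" method

"get_twitter_data" accesses the keyword search endpoint (https://api.twitter.com/1.1/search/tweets.json) and fetches tweets.

Adding "tweet_mode": "extended" as a parameter returns the full text of each tweet.

Also, setting params["max_id"] lets you fetch more tweets than a single request allows.

*The Twitter API limits how many requests you can make, so be careful when calling it in a loop! As of 2020/11/9, the limit is 180 requests per 15 minutes.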
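The max_id paging idea can be tried without touching the real API. The sketch below is only an illustration: collect_ids, fetch_page, fake_search and pause_seconds are hypothetical names that do not appear in the article's code, and fake_search stands in for the search endpoint by serving IDs 1 to 10, newest first.

```python
import time


def collect_ids(fetch_page, max_requests, pause_seconds=0.0):
    """Page backwards through search results using max_id.

    fetch_page is a hypothetical callable: it takes max_id (None for the
    newest page) and returns a list of tweet dicts that each have an 'id'.
    """
    collected = []
    max_id = None
    for _ in range(max_requests):
        page = fetch_page(max_id)
        if not page:
            break  # nothing older is left
        collected.extend(tweet["id"] for tweet in page)
        # The next request should only return tweets older than this page
        max_id = min(tweet["id"] for tweet in page) - 1
        time.sleep(pause_seconds)  # spacing between requests for the rate limit
    return collected


def fake_search(max_id, page_size=3):
    """Stand-in for the real API: serves IDs 1..10, newest first."""
    all_ids = range(10, 0, -1)
    visible = [i for i in all_ids if max_id is None or i <= max_id]
    return [{"id": i} for i in visible[:page_size]]


ids = collect_ids(fake_search, max_requests=5)
print(ids)
```

With the limit quoted above, 180 requests per 15 minutes works out to one request every 5 seconds (900 / 180), so a pause_seconds of 5 would keep a long-running loop within that budget.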

The "latent_dirichlet_allocation" method

This is the method that builds the topics.

To build them, I use gensim's implementation of Latent Dirichlet Allocation (LDA).

Gensim: topic modelling for humans

*If you'd like to know more about LDA, take a look at the link above!
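To make the steps around the LDA call easier to picture, here is a plain-Python sketch of the bag-of-words conversion and of the way the per-topic word lists are merged afterwards. It is not gensim's implementation: build_vocab, to_bow and merge_topic_words are hypothetical helpers, and assigning IDs in order of first appearance is an assumption of this sketch.

```python
import re
from collections import Counter


def build_vocab(docs):
    """Assign an integer ID to each distinct token, in order of first appearance."""
    vocab = {}
    for doc in docs:
        for token in doc:
            vocab.setdefault(token, len(vocab))
    return vocab


def to_bow(doc, vocab):
    """Turn one tokenized document into sorted (token_id, count) pairs."""
    counts = Counter(vocab[token] for token in doc)
    return sorted(counts.items())


def merge_topic_words(topic_words):
    """Flatten per-topic word lists, drop duplicates in order, drop words with digits."""
    flat = [word for words in topic_words for word in words]
    unique = list(dict.fromkeys(flat))
    return [word for word in unique if not re.search(r"\d", word)]


docs = [["化粧水", "乾燥", "化粧水"], ["ハンドクリーム", "乾燥"]]
vocab = build_vocab(docs)
corpus = [to_bow(doc, vocab) for doc in docs]
print(corpus)

merged = merge_topic_words([["化粧水", "乾燥"], ["乾燥", "2020年", "ハンドクリーム"]])
print(merged)
```

Each document becomes a list of (ID, count) pairs, which is the shape of input the LDA model is trained on, and the merge step mirrors the de-duplication and digit filter at the end of the method.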

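The "tokenize" method

The "tokenize" method uses MeCab to extract nouns.

The dictionary used is "mecab-ipadic-neologd".

*"mecab-ipadic-neologd" is a dictionary that handles new words well and is updated at least twice a week. It is a great fit for analyzing Twitter, the latest news and similar text!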

neologd/mecab-ipadic-neologd
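The parsing step can be tried without MeCab installed by feeding it text in MeCab's default output layout, one "surface<TAB>feature,feature,..." row per token followed by EOS. In the sketch below, extract_nouns and min_length are hypothetical names, and the sample string is written by hand for illustration rather than captured from a real run.

```python
def extract_nouns(parsed, min_length=3):
    """Pick noun surfaces out of MeCab-style text output."""
    nouns = []
    for row in parsed.splitlines():
        if row == "EOS" or not row:
            break  # end of the parsed sentence
        surface, feature = row.split("\t", 1)
        pos = feature.split(",")
        # pos[0] is the top-level part of speech; 名詞 means noun
        if pos[0] == "名詞" and len(surface) >= min_length:
            nouns.append(surface)
    return nouns


# Hand-written sample in the "surface<TAB>feature,feature,..." layout
sample = (
    "化粧水\t名詞,一般,*,*,*,*,化粧水,ケショウスイ,ケショースイ\n"
    "を\t助詞,格助詞,一般,*,*,*,を,ヲ,ヲ\n"
    "買う\t動詞,自立,*,*,五段・ワ行促音便,基本形,買う,カウ,カウ\n"
    "EOS\n"
)
print(extract_nouns(sample))
```

The length check plays the same role as the len(word) > 2 condition in the article's code, which keeps very short nouns out of the topics.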

The "shape_text" method

This method cleans up the text by removing emoji, unwanted symbols and unwanted words.
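To see what the clean-up does to a tweet, the sketch below applies the same kind of regular-expression steps as shape_text using only the standard library. The name clean_text and the sample tweet are made up for illustration, and the emoji and full-width symbol steps are left out so that the example runs without extra packages.

```python
import re


def clean_text(line, sub_list):
    """Apply clean-up steps similar to shape_text, minus emoji removal."""
    # Join multi-line tweets into a single line
    text = "".join(line.splitlines())
    # Drop space-delimited tokens that contain a dot (such as URLs)
    text = re.sub(r"[^ ]+\.[^ ]+", "", text)
    # Drop half-width ASCII symbols
    text = re.sub(r"[!-/:-@\[-`{-~]", "", text)
    # Drop caller-supplied patterns
    for pattern in sub_list:
        text = re.sub(pattern, "", text)
    return text


result = clean_text("新作コスメ!\n詳細は https://example.com を見てね#PR", ["PR"])
print(repr(result))
```

The spaces that surrounded the URL are left in place, so the cleaned text can contain runs of spaces. Note also that the dot pattern removes any space-delimited token containing a dot, not only URLs.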

結果

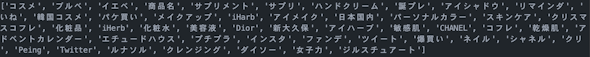

実際にコスメという単語をキーワード検索し、実験してみました!

実験結果としては以下の通りになりました。

コスメ系のトピックを抽出することができました!

画像を見てみると、「ハンドクリーム」「化粧水」などコスメに関連した単語の他にも「ツイート」「パケ買い」などの関連してないっぽい単語も抽出されてしまっていますね。

ここは改善が必要そうです。

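Summary

So, what did you think? This time I built a program that runs the results of a Twitter API keyword search through LDA to extract topics, and tried it out.

Several unrelated words are still being extracted, so it needs more work.

I'll keep improving it!

That's all for this time.

Thank you for reading to the end!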